F051 AI in healthcare 5/6: Decision support for stroke therapy (Michelle Livne, Vince Madai)

Most AI systems today are developed to aid diagnostics, not to influence treatment choices. Compared to diagnosis support tools, which are based on existing diagnostic images, predicting treatment outcomes, and hence suggesting therapies, is more complex. It also demands more complex clinical trials.

AI support systems are in general in the early stages of clinical validation. "So we are, for example in oncology, dealing with unvalidated precision medicine approaches at the moment," says Vince Madai, MD, PhD in Neuroscience, working as a researcher at University Medical Center Charité in Berlin. In the coming years, more and more AI algorithms will be clinically validated and used in clinical practice. However, healthcare will probably always lag behind other industries - because of its complexity and safety demands, says Vince Madai. Madai is the Scientific Lead of the Prediction 2020 project at Charité.

The project's team is developing AI-based clinical decision support to assist treating neurologists in treatment stratification. Personalized treatment strategies are not yet available, but the opportunities of digitization could make them possible in time.

Data enables the development of precision medicine, which is, in essence, the identification of subgroups of patients in order to arrive at the most precise advice for an individual.

According to the Internet Stroke Center, approximately 795,000 people in the United States suffer a stroke each year.

About 600,000 of these are first attacks, and 185,000 are recurrent attacks.

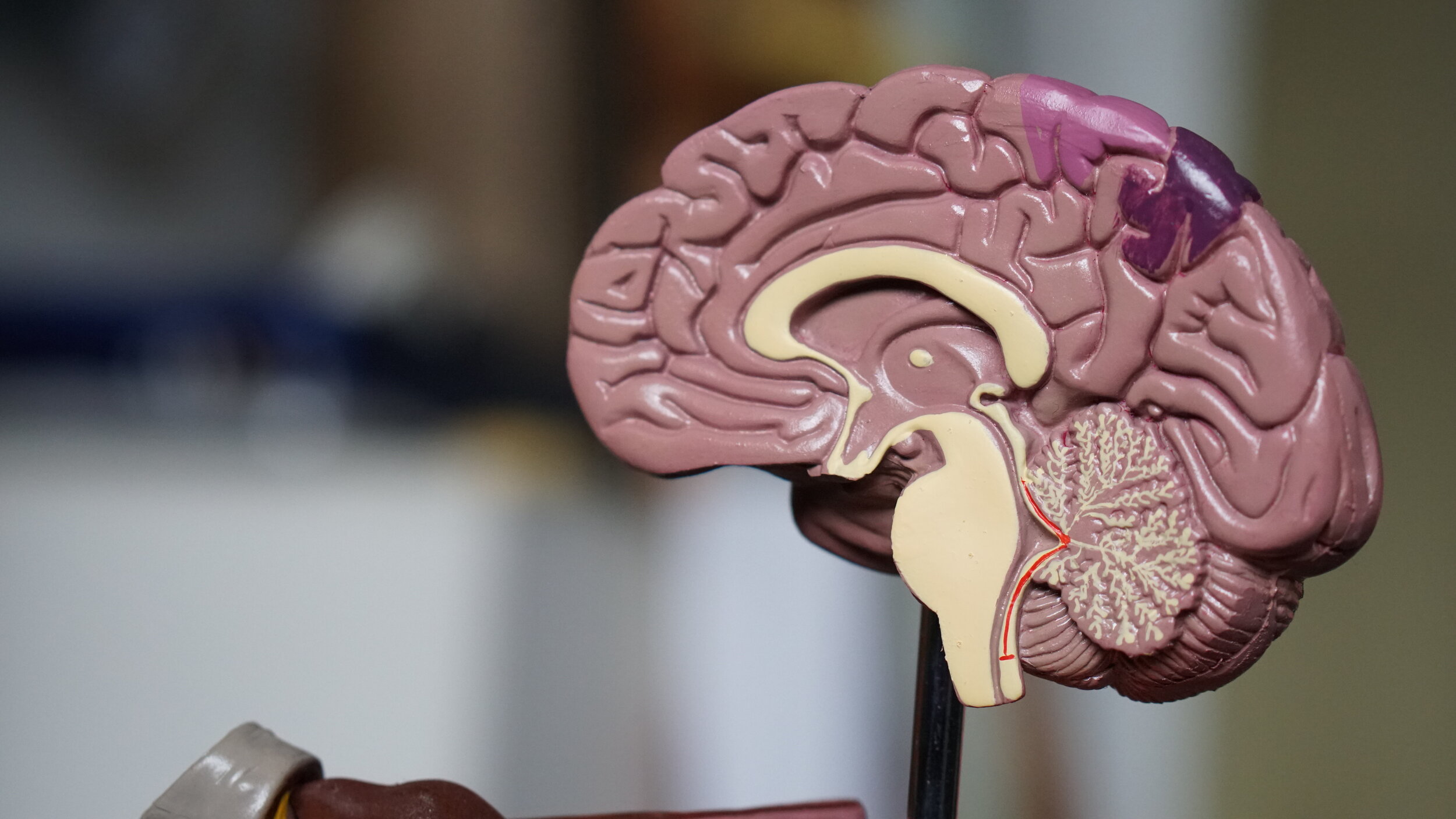

Generally speaking, there are two types of stroke. The first, called hemorrhagic stroke, is caused by a bleed in the brain, which cuts off the blood supply to a certain brain area and causes the tissue to die. The second type, called ischemic stroke, is caused by a blood clot that blocks an artery. When a patient reaches a hospital, neuroimaging is performed to see whether the stroke is caused by a bleed or a blood clot.

Treatment

Within the first few hours, doctors need to get rid of the blood clot, either with clot-busting drugs or with newer approaches that use a medical device (mechanical thrombectomy), explains Vince Madai.

Decision support systems can help in two ways:

help doctors get to the decision faster,

help with selecting the right patients for the right treatment, making treatments increasingly personalized.

Prediction 2020 combines all the data about a patient gathered in the emergency room, says Michelle Livne, PhD in Machine Learning and the project's machine learning expert. The treating neurologist then receives suggestions about different treatments and calculated outcomes for the specific patient. The project is still in the development phase, awaiting clinical validation.

Clinical validation is a general challenge in the development of AI support systems. "In the end, these AI systems are just tools; looking at the underlying medicine is what's important. Especially in oncology, the validation of preliminary results is often not done. This is why, at the moment, the field suffers from unvalidated precision medicine approaches," says Vince Madai, who predicts it will take three to five or more years before support systems become part of clinical practice.

Even if a patient is having a stroke, it might not be seen on a CT scan for several reasons.

How does this affect the development of AI systems?

Michelle Livne and Vince Madai.

"Many things can be invisible for the human eye, and the algorithm can detect more sophisticated patterns," says Michelle Livne. Another reason a stroke might not be seen in images is that it was mild. "It could also happen that a stroke would not be visible during first imaging, but only later. However, this argues for a very recent onset, which is just another important pattern an AI system needs to capture," explains Vince Madai. However, in precision medicine tools in development, such as Prediction 2020, images are not the only data points used for predictions. "We try to leverage every data available at the decision making. Images and clinical data," says Michelle Livne.

Do we need to understand how AI works?

With the new wave of AI development, made possible by stronger computing power and general advancements in data gathering, one of the debates in the AI community is the importance of interpretability. Interpretability refers to the ability of researchers to explain how an AI model came to a specific conclusion. Some argue that as long as AI systems provide better results than existing methods, how they work does not matter. Vince Madai argues differently: "Performance is not the only issue. Doctors do not want black boxes. Which brings us to another issue - explainability. Because of the complexity of AI, we will need to trust our regulators to demand interpretability standards from developers. And service providers will need to take care of how to present results to the users, so the users will understand how systems work."

Interpretability is still being explored. With image analysis and convolutional neural networks (CNNs), scientists are, among other things, trying to understand which parts of an image were informative for a prediction.
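One common way to ask which part of an image mattered is occlusion sensitivity: blank out one region at a time and measure how much the model's output changes. The sketch below is only an illustration of that idea and not the method used by Prediction 2020. The function `toy_model` is a hypothetical stand-in for a trained CNN, and the 4x4 image, patch size, and fill value are assumptions chosen to keep the example small.

```python
from typing import Callable, List

Image = List[List[float]]


def toy_model(image: Image) -> float:
    """Hypothetical stand-in for a trained CNN.

    It only looks at the top-left 2x2 region and returns its mean intensity.
    A real model would be a trained network producing a prediction score.
    """
    return (image[0][0] + image[0][1] + image[1][0] + image[1][1]) / 4.0


def occlusion_map(model: Callable[[Image], float], image: Image,
                  patch: int = 2, fill: float = 0.0) -> List[List[float]]:
    """Blank out one patch at a time and record the drop in the model score.

    A large drop means the occluded region was informative for the model.
    """
    baseline = model(image)
    rows, cols = len(image), len(image[0])
    heat = []
    for r in range(0, rows, patch):
        heat_row = []
        for c in range(0, cols, patch):
            occluded = [row[:] for row in image]  # copy, keep the input intact
            for i in range(r, min(r + patch, rows)):
                for j in range(c, min(c + patch, cols)):
                    occluded[i][j] = fill
            heat_row.append(baseline - model(occluded))
        heat.append(heat_row)
    return heat


# Assumed example input: a uniform 4x4 image.
image = [[1.0] * 4 for _ in range(4)]
heat = occlusion_map(toy_model, image, patch=2)
print(heat)  # [[1.0, 0.0], [0.0, 0.0]]
```

Only the top-left patch changes the score, so the map points to the one region this toy model actually uses. With a real network, the same procedure would produce a coarse heat map over the scan.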

But to enable good AI development, it is also important that medical professionals and programmers understand each other. Vince Madai warns that the languages of programmers and doctors differ to such an extent that doctors with AI knowledge will play a crucial role in the future. They will work as translators between the two fields, enabling faster progress.

Ethical dilemmas

AI development is surrounded by ethical dilemmas. One of them is data bias - data from Germany might not be applicable to other continents. The other big issue is data privacy. It is very hard to acquire data in Germany and Europe in general. "In my opinion, there was never a fair discussion in public in the German or European public, about how increased data privacy hinders development of tools that could save people's lives. That is an ethical dilemma - data privacy vs. the public good. If the discussion happens, and people say we want data privacy, not the better systems, that's fine. But I don't see that discussion happening in a fair way," says Vince Madai.

AI literacy is another issue. A very small community of people fully understands AI. "Most doctors don't understand the complexity of AI, and without understanding, you can't have a public discussion," mentions Vince Madai. A similar problem prevails in other fields, comments Michelle Livne, adding that in the end, the important part is for people to understand the implications and rely on public bodies to take care of the greater good.

Tune in for the full discussion:

Some questions addressed:

Signs of strokes are well known: numbness in the arms, problems with speaking fluently. The brain is not getting enough blood. Someone calls an ambulance. What happens when a patient reaches the hospital?

How many types of strokes are there?

Time is crucial in stroke treatment - what are the current support systems available to doctors when a patient hit by stroke is brought to them? What kind of systems are in development?

Even if you are having a stroke, it might not be seen on a CT scan. A lot of AI at the moment is based on pattern recognition. If there is nothing visible on a CT - what does this mean for the development of AI supported decision support systems?

One of the discussion topics in AI is interpretability. Complex models are harder to understand, and the more accurate an AI model is, the less interpretable it tends to be. For example, a decision tree is easily interpretable but has lower accuracy, compared to deep neural networks, which have higher accuracy and lower interpretability. Why is that important?

Opinions are divided between Yes, interpretability is needed and No, interpretability is not needed if the network proved to be effective. Where do you stand on that?

A lot of companies are working on AI, but most of the development and testing at the moment happens with retrospective studies. How big of an issue, in your view, is the lack of clinical studies done on patients? What does this mean in terms of the time needed for AI support systems to come to regular clinical practice if everything needs to be validated through clinical studies, which take years to finalize?

If you wanted to apply your knowledge to another field in healthcare, what would be the next frontier you could focus on that is closest to stroke research?